Working with Dynamics 365 Finance and Operations CSV Files in Azure Data Lake and Power BI without Common Data Model

When bringing in Dynamics 365 Finance and Operations data from Azure Data Lake Storage (ADLS) to a Power BI report, ideally you’ll be able to use the Common Data Model (CDM) functionality.

Sometimes, however, your ADLS setup doesn’t have CDM available, and you are stuck using File System View. Don't panic, because your data will still be usable, but let’s discuss how you can bring that data natively into Power BI Desktop and how you can intervene if there are any parsing issues.

How to Create Your Common Data Model

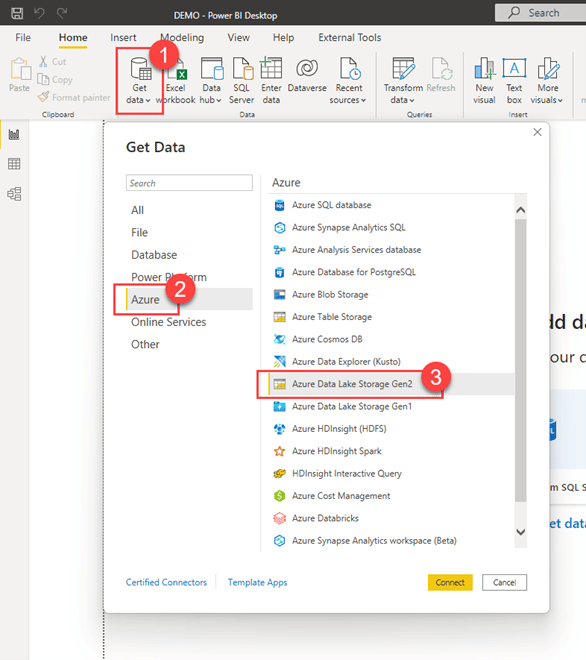

Let’s step back and quickly refresh how we connect to ADLS and confirm whether we can use CDM. Create that new report with Power BI Desktop, Get Data and locate our ADLS Gen2 connector.

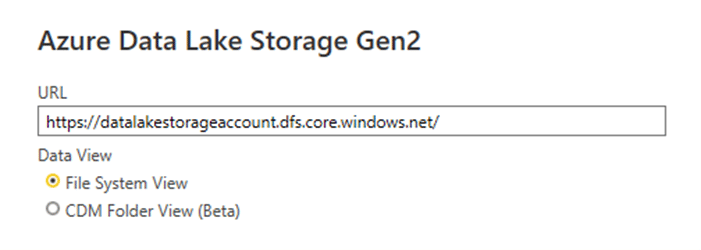

From here, enter the DFS Endpoint for your ADLS, which should be in the format of: https://{datalakestorageaccountname}.dfs.core.windows.net/

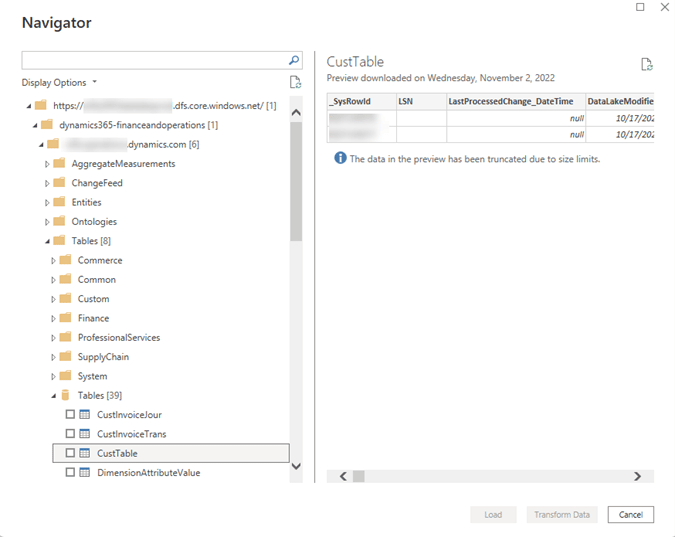

Part of the CDM Folder View magic is there are JSON files that Power BI Desktop can recognize and use to bring in your data with more structure. Below is a quick example of what we will see if CDM Folder View is an option:

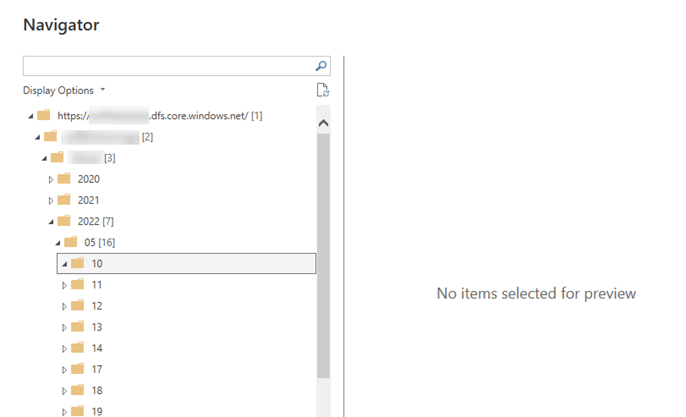

However, if the Navigator presents our ADLS table structure in this fashion (below) with no data/tables to select, we’ll have to pivot to the File System View.

That may sound like a headache, but it is not uncommon for an ADLS set up for an older version of Dynamics 365 Finance and Operations.

How to Pivot to a File System View

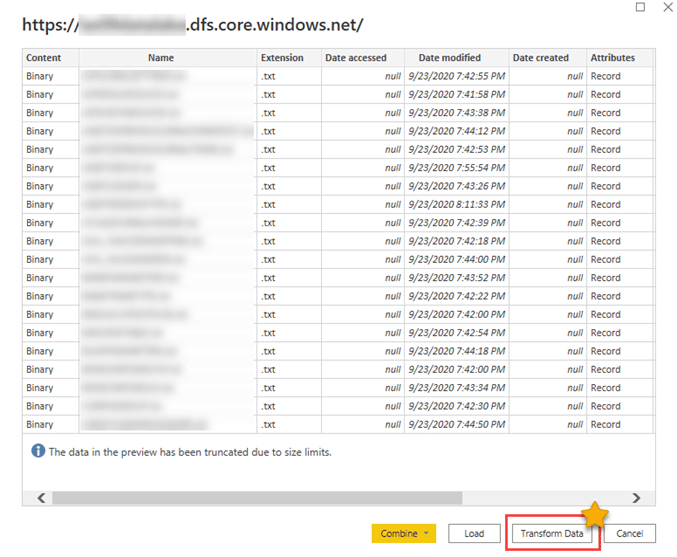

As we move forward using File System View, we’ll see our available data present in a way detailed in the picture below.

Luckily, if you are seeing parsing issues with your data, you can address this by editing the M Query that gets created natively by Power BI Desktop when this data query is initially created. For that, let’s go to Power Query Editor by selecting Transform Data.

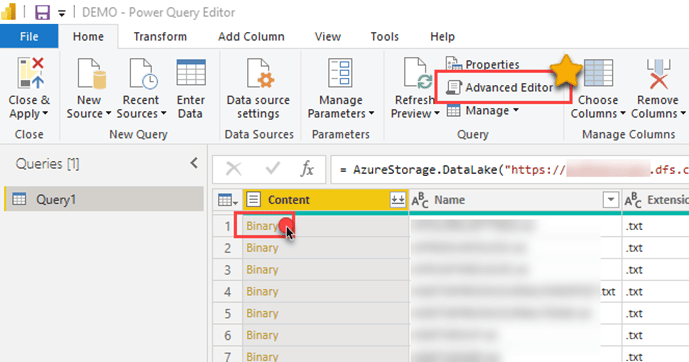

Once in the Power BI Query Editor, we’ll look at all the available files (.txt/CSV) in our ADLS that we can use. Locate the record that is the table of data we want to use, whether that means scrolling and searching, or using some filtering functionality. After locating the record, click on the "Binary".

Power BI has some great automation that will occur by importing the data of that file:

- parsing

- promoting headers

- changing data types of fields.

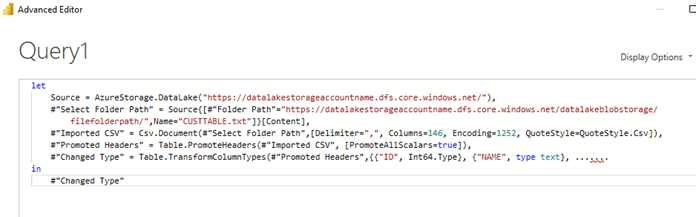

If we open our Advanced Editor, we can see the detail of these steps applied.

Let’s break down what is happening in the steps we see here:

- Source – shows our selected ADLS

- #"Select Folder Path" – is what file we are opening when clicking Binary, in this example, getting the data of our CUSTTABLE

- #"Imported CSV" – this is Power BI bringing in the data and taking default parsing actions on it

- #"Promoted Headers" – another auto step, the first row from the parsing is promoted to headers

- #"Changed Type" – also auto, Power BI takes an educated guess in changing all the fields’ data type to what it should be

This brings us to our potential issue and where we can put our fix into action. At times, the default parsing Power BI completes doesn’t work with our data. If special characters, line or paragraph breaks, double quotes at end of fields (maybe designating inches), and escape characters are not handled properly—they all can confuse the parsing and cause our data to be imported incorrectly.

A common result from parsing issues is data getting ‘shifted’ over a column or two. For example, you might now suddenly we have State data in our Zip Code column for certain records. We call that bad data and it is a headache.

Manually scrolling through and trying to correct this bad data will lead to tasking manual work. To understand where our parsing went wrong, let’s dig a little into the parsing step of #"Imported CSV" and what is going on in this Csv.Document function:

- Delimiter="," – this is what delimiter is used for parsing your CSV data; the comma is standard, but if it’s something else (ex: ";" or "|"), certainly update it here

- Columns=146 – how many columns are in your CSV data; Power BI usually does a pretty good job at identifying this

- Encoding=1252 – this is the code page identifier used for parsing; this may default to 1252 - ANSI Latin 1; Western European (Windows), but your ADLS CSV file maybe actually be stored in 65001 - Unicode (UTF-8), so change to 65001 as needed

- QuoteStyle=QuoteStyle.Csv – this is how quoted line breaks are to be handled for parsing; this may default to QuoteStyle=QuoteStyle.None, but we’ve seen better luck with QuoteStyle=QuoteStyle.Csv, so change as needed

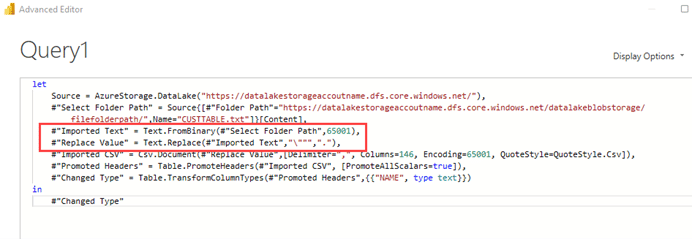

The other trick around handling these ‘special situations’ is a replacement solution. To complete this, we need to complete it before Power BI starts doing its parsing magic. Here’s where our interject happens of placing these steps within our code:

#"Imported Text" = Text.FromBinary(#"Select Folder Path",65001),

#"Replace Value" = Text.Replace(#"Imported Text","\""","."),

These steps complete a text extract from the Binary first, which allows for the replace function to be used, mainly for that ‘double quotes at end of a field’ situation. After this is completed, we’ll see much better success when Power BI does its parsing!

Also note, the auto #"Changed Type" step that Power BI creates—you most likely won’t need all the data fields within your reporting, so only keep the change types for the fields you keep.

Hopefully, this parsing breakdown assists in explaining what Power BI is doing when you bring in that ADLS CSV non-CDM file. More importantly, these highlighted intervention edits should provide you with better data to start your report out on the right foot!

Questions?

We are happy to help! Please get in touch with us so we can help you optimize your Microsoft business solutions!

Under the terms of this license, you are authorized to share and redistribute the content across various mediums, subject to adherence to the specified conditions: you must provide proper attribution to Stoneridge as the original creator in a manner that does not imply their endorsement of your use, the material is to be utilized solely for non-commercial purposes, and alterations, modifications, or derivative works based on the original material are strictly prohibited.

Responsibility rests with the licensee to ensure that their use of the material does not violate any other rights.